Everyone is trying to create amazing content, build links, and care about on-page SEO and off-page SEO factors.

But there is another part that most people are ignoring.

It’s the Technical SEO.

Technical SEO is the most underestimated part of SEO. But it plays a major role nowadays, and you should not ignore it.

In this post, you will learn about the Technical SEO factors. You will also discover how you can improve your ranking with the help of Technical SEO.

Technical SEO Factors 2025

Technical SEO refers to all the SEO work beyond content. There are several technical factors to consider when optimizing a site.

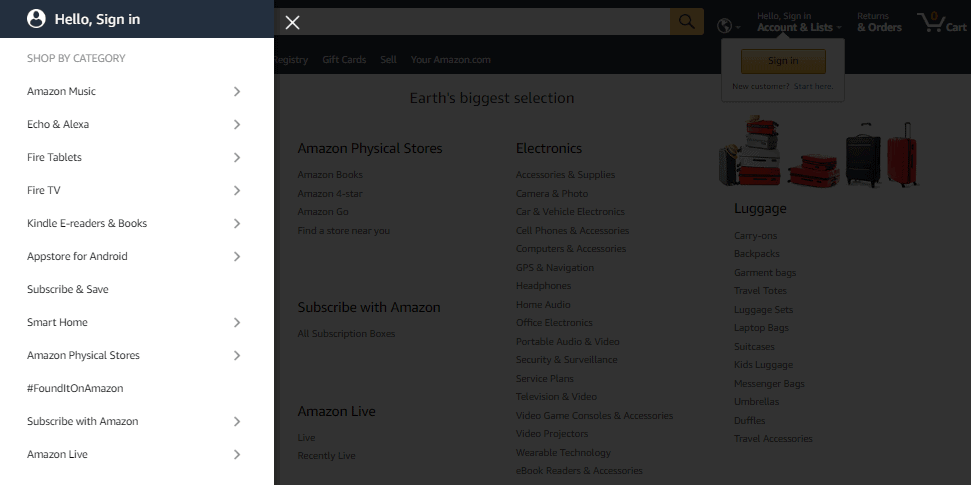

1. Website Architecture

It is one of the most important things when it comes to Technical SEO.

Website Architecture states how a website’s pages are structured and interlinked to one another.

A well-structured architecture helps users and Search Engine crawlers. They can easily find what they’re looking for and easily navigate inside a website.

Flat site architecture is always better for SEO. A flat architecture means that one can reach any page on your website in 4 clicks or less.

On the other hand, deep architecture means some pages take more than 4 clicks to reach, sometimes 10 or more. This type of site is difficult for Google bots and users to navigate.

If you are running a small blog, then you can categorize all the blog posts through categories. You can list all the categories on the home page.

For reference, just head to eCommerce websites like Amazon and Best Buy. They have thousands of web pages in different categories. All of their categories have internal links from their homepage.

Again all the categories are divided into subcategories. So one can easily navigate throughout the site.
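To see whether a site really is "flat", you can compute the click depth of every page with a breadth-first search over its internal links. The sketch below is only an illustration: the `site` link map and its URLs are made up, and in practice you would build the map from a crawl of your own site.

```python
from collections import deque

def click_depths(links, home):
    """Return the minimum number of clicks from `home` to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical link map: each page lists the internal pages it links to.
site = {
    "/": ["/blog/", "/shop/"],
    "/blog/": ["/blog/seo/"],
    "/blog/seo/": ["/blog/seo/technical-seo/"],
    "/shop/": [],
}

depths = click_depths(site, "/")
too_deep = [page for page, depth in depths.items() if depth > 4]
print(depths)
print("Pages deeper than 4 clicks:", too_deep)
```

Any page that appears in `too_deep` is a candidate for a link from the home page or from a category page.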

Benefits of Well Structured Website Architecture

1. Better Indexing:

Search Engine spiders find all the pages of your website through the structure. They index all of the pages on your website.

If your site structure is complicated and some pages are far away from the home page, then Google bots will face difficulty finding them. As a result, bots may miss some of your important pages.

If your site has good interlinking and hierarchy structure, then Google bots can easily crawl those pages. They can index them all.

2. Advantage of Page Rank:

Internal links from high-value pages of your site can help to improve overall authority.

Let’s come to the user side. A structured website is also helpful for users. When a user lands on a site, they notice that all the pages are well organized. As a result, they want to spend more time on it. This organization encourages more engagement.

More time on site has a positive impact on overall SEO. It sends Google a good signal that the site is trustworthy, which is why visitors are spending more time on it.

Google is also watching user signals more closely than ever. So rather than spending all your time on On-Page and Off-Page SEO factors, try to make a well-structured site.

2. XML Sitemaps

A sitemap is also important for crawling and indexing. It is like a blueprint for your website. It helps Search Engine crawlers find, crawl, and index your web pages.

Sitemaps also tell Search Engines which pages on your website are most important, so they can crawl them.

Various Types of Sitemaps:

- Normal XML Sitemap: This is the most common type of sitemap. It is a form of an XML Sitemap that has links to various pages on your website.

- News Sitemap: This type of sitemap helps Google bots find different pages on sites that are approved for Google News.

- Image Sitemap: Helps Google bots to find all of the images listed on your site. This helps to show images on Google Image search.

- Video Sitemap: It is a special sitemap that helps Google understand the video content on your website.

The journey starts with creating a sitemap. No matter what website you are running, you can create a sitemap for it.

If you are using WordPress, then it is very easy to create a sitemap. You have probably heard about Yoast SEO before. It is one of the most popular SEO plugins for WordPress, with 5 million-plus active installations.

The best part of Yoast SEO is that it creates and updates sitemaps automatically. In other words, it is a dynamic sitemap. Make sure you have installed the plugin and enabled the sitemap option in the settings.

There are many options that you can add to your sitemap. You can choose which pages you want to be in the sitemap. For a normal blog, you can choose posts and pages to be shown on the sitemap.

Whenever you add a new page or post to your site, that page is automatically added to your sitemap file.

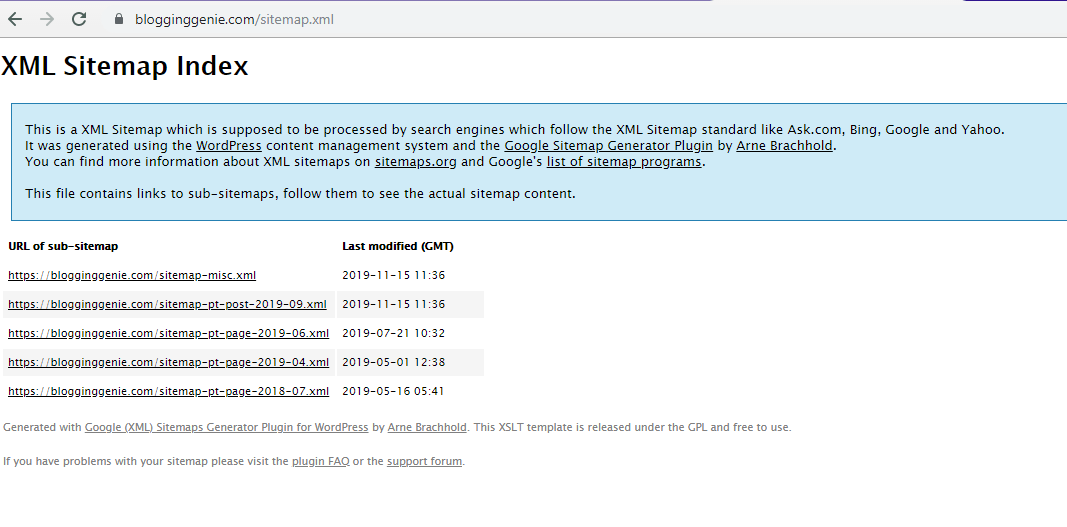

Yoast SEO sitemap URL is generally like this: https://yoursite.com/sitemap_index.xml

Have a look at our sitemap.

There are also sub-sections in the sitemap. You will find the ones you have chosen in the settings.

If you are not using Yoast SEO, then there are a lot of XML sitemap plugins available there.

One of the best plugins is Google XML Sitemaps. It also generates and updates the sitemap automatically.

Not using WordPress? Don’t worry.

There are some websites that help you to generate a sitemap. You can use XML-Sitemaps.com for this purpose.

This process is manual, though. You have to repeat it every time you add a new page or post.
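If you would rather script this step than repeat it by hand, a basic XML sitemap is simple enough to generate yourself. Here is a minimal sketch using Python's standard library. The `build_sitemap` helper and the example URLs are assumptions for illustration. It writes only the required `<loc>` element for each page.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(urls):
    """Return a minimal XML sitemap (as a string) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # special characters are escaped
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Hypothetical pages; replace with the real URLs of your site.
sitemap_xml = build_sitemap([
    "https://yoursite.com/",
    "https://yoursite.com/about/",
])
print(sitemap_xml)
```

Save the output as UTF-8 (for example as `sitemap.xml` in the root of your site) and re-run the script whenever you publish a new page.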

Once you are done with the sitemap, then it’s time to check it once. If everything looks good on the sitemap, then it’s time to submit it to the Google Search Console.

Steps to Submit Sitemap To Google Search Console:

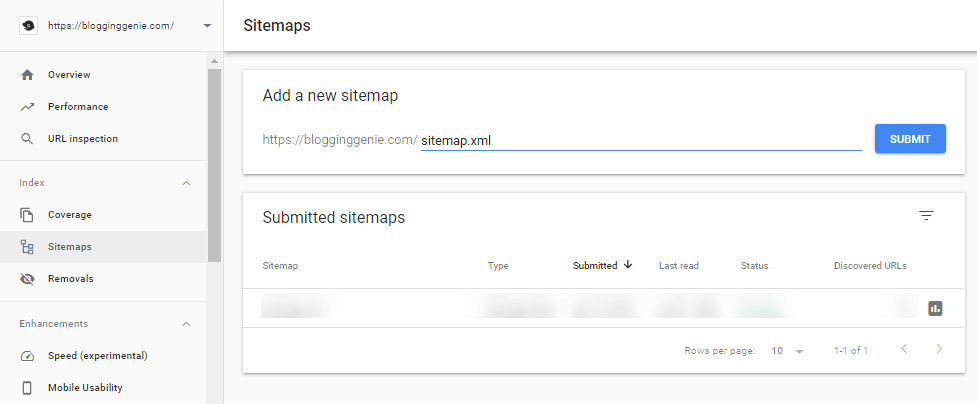

1. Log in to your Google Search Console account. Then, go to “Index” → “Sitemaps” in the left sidebar. If you have submitted the sitemap before, then it would show you that.

2. If not, then click on “Add a new sitemap” and enter your sitemap URL there. Then submit it. Now you will see the submitted sitemap.

If you are using Yoast SEO, then once you submit the sitemap, it will be automatically updated with new pages. This happens each time you add a new page. If you have done this manually, then you must repeat the process. You will need to do this every time you add a new post.

3. After Google has crawled it successfully, you will see the message “Sitemap index processed successfully”.

4. Then go to the Coverage Report on your sitemap. The coverage Report will show you the valid and error pages.

One thing to check is the Excluded pages. Check those pages and also check the error messages. If you see a message like “Duplicate, submitted URL not selected as canonical,” then remove those duplicate URLs from your sitemap, and delete the pages themselves if you don’t need them.

After resolving the errors on the index report, you are good to go.

3. Page Speed

One second might not seem like a lot, but it has a huge impact on your website.

This means you have no option other than to speed up your website.

Page speed is a very technical concept when it comes to SEO. Understanding this might have a huge impact on your SEO efforts.

“Page Speed” isn’t as easy as it sounds because there are many aspects of measuring page speed.

According to some studies, a one-second delay in load time leads to:

- 7% Loss in Conversions

- 11% Fewer Page Views

- 16% Decrease in Customer Satisfaction

- 20% Increase in Bounce Rate

Mainly, there are three common components:

1. Fully Loaded Page: This measures how long it takes to load 100% of the resources on a page. This is the most common and straightforward way to measure page speed. This is also a non-tech way to determine how fast a page loads.

2. Time to First Byte: This measures how long it takes for the browser to receive the first byte of the response after requesting a page. You have probably seen a white screen before a page starts loading. That is the period Time to First Byte covers. It usually lasts a fraction of a second, so you might not notice it.

3. First Meaningful Paint/First Contentful Paint: This measures how long it takes for the first viewable components to appear on a page.

How to Measure Page Loading Speed?

Head to the Google PageSpeed Insights tool and check the metrics of your site. You can also measure site speed through GTmetrix and Pingdom.

A common industry guideline is that your page should load in under 3 seconds.

Quick Ways To Speed Up Your Website

There are various ways to speed up your website.

1. Minimize HTTP Requests:

Most of a page’s load time is spent downloading its different parts. Each component (image, stylesheet, script) needs its own HTTP request.

So reducing HTTP requests can boost your overall loading speed. You can use the Google Chrome browser’s Developer Tools to check the number of requests.

Look through your files and remove the unnecessary ones.
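For a rough count without opening Developer Tools, you can scan a page's HTML for the tags that usually trigger extra requests. This is only a sketch: the `ResourceCounter` class and the sample page are hypothetical, and it looks at external scripts, stylesheets, and images only, so fonts, CSS background images, and requests made by JavaScript are not counted.

```python
from html.parser import HTMLParser

class ResourceCounter(HTMLParser):
    """Collect URLs of resources that typically trigger extra HTTP requests."""

    def __init__(self):
        super().__init__()
        self.resources = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("script", "img") and attrs.get("src"):
            self.resources.append(attrs["src"])
        elif tag == "link" and attrs.get("rel") == "stylesheet" and attrs.get("href"):
            self.resources.append(attrs["href"])

def count_resources(html):
    """Return how many external scripts, stylesheets, and images the HTML references."""
    parser = ResourceCounter()
    parser.feed(html)
    return len(parser.resources)

# Hypothetical page used only to demonstrate the counter.
page = """
<html><head>
<link rel="stylesheet" href="/style.css">
<script src="/app.js"></script>
<script src="/analytics.js"></script>
</head><body><img src="/hero.png" alt=""></body></html>
"""
print(count_resources(page))
```

Run it before and after your cleanup to confirm that the number of referenced files actually went down.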

2. Browser Caching:

Browser caching is one of the easiest ways to speed up a slow website. Caching allows you to store parts of your web page in a visitor’s browser.

When someone visits a page, some parts of your website are cached and stored in their browser. On the next visit, those parts load from the cache instead of the server, so the site feels fast to the user.

There are many types of caching, and the options differ from plugin to plugin. If you are using WordPress, then you can use W3 Total Cache for caching. WP Rocket is also a good paid option.

3. Choose Fast Hosting:

Hosting also plays a huge role in the speed and performance of a website. People often neglect it and choose the cheapest hosting.

Hosting providers like SiteGround provide good hosting services and they have fast servers. So, when you are choosing to host, don’t go for a cheap one. Go for a fast (and overall best) one.

4. Compress Images:

Images are often the heaviest component of a webpage. They also take the most time to load. So compressing images has a huge impact on page loading.

If you are using WordPress then you can use WP Smush and it compresses all the images when uploading. You can also compress all the previous images. Otherwise, you can use image compression websites.

Another thing you can do is lazy load images. This technique delays loading an image until someone scrolls to the section that contains it. Some caching plugins have this feature. Otherwise, you can use the Lazy Load by WP Rocket plugin for this purpose.

5. Use a CDN:

This is another easy way to speed up your site’s loading. In typical hosting, all visitors’ requests are served by the same server. In high-traffic situations, it might take a long time to process the requests.

CDN acts smartly and hosts your website files globally. Whenever someone visits your website, it serves the files from nearby servers.

6. Enable Compression:

Compressing your files is a good choice. You can compress them with Gzip. This works well with CSS and HTML because these files contain a lot of repeated code and whitespace, so the Gzip-compressed version is much smaller.

If you don’t have Gzip compression enabled, then you’ll want to fix this as soon as possible. If you are using WordPress, then caching plugins like WP Rocket and W3 Total Cache plugins support Gzip compression options.
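To get a feel for how much Gzip can save on repetitive text, here is a small sketch using Python's built-in `gzip` module. The CSS string is made up and far more repetitive than a real stylesheet, so treat the number it prints as an upper bound, not a typical result.

```python
import gzip

def gzip_savings(data: bytes) -> float:
    """Return the fraction of bytes saved by Gzip-compressing `data`."""
    compressed = gzip.compress(data)
    return 1 - len(compressed) / len(data)

# Hypothetical stylesheet: the same rule repeated 200 times.
css = (".card { margin: 0 auto; padding: 16px; color: #333; }\n" * 200).encode("utf-8")
print(f"Saved {gzip_savings(css):.0%} of {len(css)} bytes")
```

On a live site the web server or a caching plugin does this compression for you. The script only illustrates why text files shrink so well.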

Note: Before you start speeding up your website, run speed tests in the tools mentioned above. Take a screenshot of the results. Then apply the techniques and run the speed tests again. Compare both sets of results and you will see the difference.

Speeding up your website is not an option anymore. This is a vital necessity now.

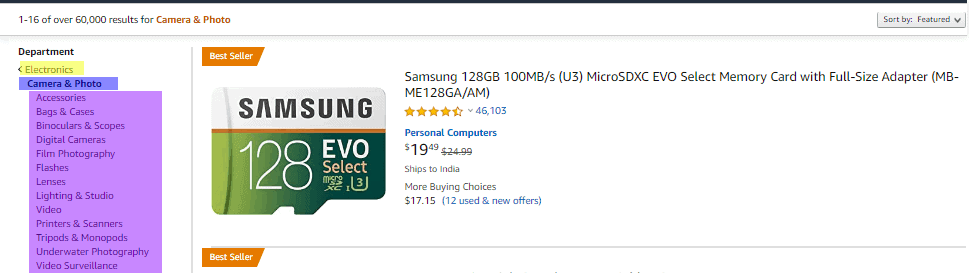

4. Crawl Budget For SEO

Have you heard about the crawl budget before? If your answer is no, then I would not be surprised, because very few people know about it. Hardly anyone talks about it, which is why it is still untapped.

Crawl Budget refers to the number of pages on a website that Googlebot crawls and indexes within a given timeframe.

You might be wondering why Crawl Budget is important. In short, if Google does not crawl your site, how would it be placed in the search results?

So if your total number of pages exceeds the Crawl Budget, some of those pages will not be crawled and indexed.

If you are running a blog or a small website then you don’t need to worry about this. Google has awesome algorithms to crawl and index sites.

If you have a big website (like an eCommerce site), this applies to you. It is particularly relevant if your site has thousands of pages. Google might take time to find all those pages.

Have you added a new section to your existing website? If yes then this crawl budget concept is also for you. You need to make sure that you have enough crawl budget to index them all quickly.

Lots of redirects can also ruin your crawl budget. Google bots will face difficulty in finding the redirected pages in the given time.
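One way to spot wasteful redirects is to follow each chain and count the hops. The sketch below works on a plain dictionary of redirects. That dictionary and the `follow_redirects` helper are hypothetical. In practice you would export the redirect map from your server configuration or from a crawler.

```python
def follow_redirects(redirects, url, max_hops=10):
    """Return the list of URLs visited, starting at `url`, following `redirects`."""
    chain = [url]
    while url in redirects and len(chain) <= max_hops:
        url = redirects[url]
        if url in chain:  # redirect loop: record it and stop
            chain.append(url)
            break
        chain.append(url)
    return chain

# Hypothetical redirect map: old URL -> new URL.
redirects = {
    "/old-post": "/2019/old-post",
    "/2019/old-post": "/blog/old-post",
}

chain = follow_redirects(redirects, "/old-post")
print(" -> ".join(chain), f"({len(chain) - 1} hops)")
```

Any chain with more than one hop can usually be shortened by pointing the first URL straight at the final destination.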

To increase the crawl budget of your website, you can do the following things.

1. Improve your site speed, which indirectly leads to Googlebot crawling more. Slow-loading pages waste valuable Googlebot time. As the crawl budget is limited for each site, you need to use it well.

2. Internal linking is also a good practice to make the most of the crawl budget. Google bots can easily move from page to page through internal links.

3. Well-organized site architecture is also helpful for this purpose. Bots can easily navigate through the hierarchy structure.

4. Duplicate content is another thing that affects it. So delete those duplicate pages. Google doesn’t want to waste its resources to crawl the same content again and again.

If you want to optimize your crawl budget, follow the above tips. You will get the most out of it.

5. HTTPS Necessity

HTTPS (Hypertext Transfer Protocol Secure) refers to a secure website. When you install an SSL certificate on a web server, it activates the padlock and the HTTPS protocol. This secures the connection between a web server and a browser.

Google has added HTTPS to its ranking factors. So it always encourages webmasters to migrate their site from a normal version to a secure version.

Moreover, the Google Chrome browser now shows a “Not secure” warning if a site doesn’t have HTTPS. This has a very bad impact on the trust of your website. Visitors feel the site is spammy, and they are more likely to leave.

According to a global survey, 84% of people don’t purchase from a site if it has an insecure connection, and 82% of people don’t want to browse an unsecured website at all.

Some studies found that there is a moderate correlation between HTTPS and higher search rankings. Other studies also found a slight correlation as well.

Many hosting providers give a free SSL certificate with the hosting plan. So if you are one of them then you can enjoy it for free.

If you have not, then you can also buy one and install it on your server.

If you are using WordPress, then you can get a free SSL certificate through some plugins. You can use Really Simple SSL for this purpose.

If you are putting so much effort into other SEO factors, your site must be secure. Otherwise, all the effort will go down the drain.

So, consider installing an SSL certificate and making your site secure, and take advantage of HTTPS.

6. Mobile Friendly

Mobile search is now skyrocketing. The number of searches from mobile devices is now at the topmost level. For the first time, mobile searches beat desktop searches.

So you need to optimize your site for mobile users as well. No matter what industry you’re in or what type of websites you have, your site needs to accommodate mobile users.

Most of the modern themes nowadays have responsive designs. The website resizes automatically according to screen size.

You can also test your site’s mobile-friendliness through some tools. One of the best tools you can use is the Google Mobile-friendly test.

If your site passes this test, then you are good to go.

Rather than just optimizing the site through responsive themes, you can go beyond.

Avoid pop-ups on your mobile site. Pop-ups are fine on desktop versions but not on mobile devices, where they are annoying. If you need one, then make it accessible and add a small “X” button to close it.

The menu is an important factor in optimizing for mobile devices. As the mobile screen is small compared to desktop and laptop screens, the menu needs to fit within it.

You may also go for a mobile-specific website. This is the highest level you can adopt. These types of websites are specially designed for mobile devices.

When a user visits your website through the desktop, it shows the desktop version of the site. When someone visits from a mobile device, it shows the mobile version of the same website.

This is not a responsive design. The mobile-specific sites are specially designed to fit mobile devices.

Reduce the amount of text that appears on the screen at once. A long paragraph of hundreds of words may look fine on large-screen devices, but it is tedious to read on mobile devices.

So write small paragraphs of 2-3 sentences maximum. This can help visitors to scan through your websites on desktop devices and smartphones as well.

Speed also matters for mobile-optimized sites. We have discussed many things about website speed optimization earlier in this article. Therefore, no need to mention it here again.

7. Duplicate Content

Duplicate content is content that is identical or very similar to content elsewhere. It can appear on other websites or on various pages of the same website.

Google dislikes duplicate content and strongly discourages it. If you have duplicate content on your website, then you can face some issues.

The first issue would be very little organic traffic. Google doesn’t want to index copied content from other websites. As per Google’s terms, they always want to rank high-quality and unique information.

In some rare cases, this issue can lead to a Google Penalty. This happens when someone exactly clones a website.

Google generally doesn’t index duplicate pages. If you have some duplicate pages, then fewer of your pages will be indexed. If Google finds a duplicate at the time of crawling, it simply doesn’t add the page to the index.

You can use some third-party tools like SiteLiner to check the duplicate pages. Just paste your website URL and it shows you the pages having the same issues.
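For a quick local check, you can also fingerprint the text of each page and group pages whose fingerprints match. This is a simplified sketch and not how any particular tool works: the `pages` dictionary is made up, and hashing only catches exact duplicates (after normalizing case and whitespace), not near-duplicates.

```python
import hashlib
import re
from collections import defaultdict

def fingerprint(text):
    """Hash the text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

def find_duplicates(pages):
    """Return groups of URLs whose page text is identical after normalization."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [urls for urls in groups.values() if len(urls) > 1]

# Hypothetical pages: URL -> extracted body text.
pages = {
    "/red-shoes": "Comfortable  running shoes for every day.",
    "/red-shoes?ref=home": "comfortable running shoes for every day.\n",
    "/blue-shoes": "Lightweight shoes for trail running.",
}
print(find_duplicates(pages))
```

Each group it prints is a set of URLs that should either be merged, redirected, or pointed at a single canonical version.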

To avoid these types of issues, you can follow the following tips:

1. Create your own content and don’t copy-paste from other websites.

2. E-Commerce websites can have serious duplicate content issues, as they have thousands of URLs and many similar products. So make each product URL and description distinct to avoid duplicate issues.

3. Make sure that there is one version of your website. WWW or non-WWW. Sometimes there are two different versions of the same site. Make sure that you have proper redirection from the other version.

4. Check the Google Search Console’s indexing report. It shows you the indexed and error pages. If you find any duplicate content errors there, then either delete those pages or redirect them.

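5. Use the canonical tag. The HTML form of this tag looks like this (the URL is only an example):

`<link rel="canonical" href="https://yoursite.com/original-page/" />`

This tag tells search engines that the marked page is the original and that they should ignore the rest. E-Commerce websites use this tag the most.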
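To check which canonical URL a page actually declares, you can parse its HTML and read the `href` of the `<link rel="canonical">` element. The sketch below uses Python's standard `html.parser`. The `CanonicalFinder` class and the sample markup are assumptions for illustration.

```python
from html.parser import HTMLParser

class CanonicalFinder(HTMLParser):
    """Remember the href of the first <link rel="canonical"> element."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and self.canonical is None:
            self.canonical = attrs.get("href")

def find_canonical(html):
    """Return the canonical URL declared in the HTML, or None if there is none."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical

# Hypothetical product page with a tracking parameter in its own URL.
sample = '<html><head><link rel="canonical" href="https://yoursite.com/shoes/"></head></html>'
print(find_canonical(sample))
```

If the function returns `None`, the page declares no canonical URL and search engines have to pick one on their own.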

Another best practice is to noindex tag and category pages. If you are using WordPress, then it generates tag and category pages automatically. These pages have the highest potential for duplicate content. You can simply noindex those pages to avoid this type of issue.

Duplicate content is one of the areas where you have to take care.

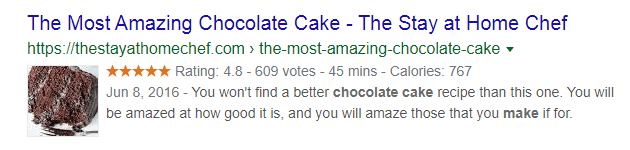

8. Schema Markup

Schema Markup is a coding standard given by Schema.org (often called Schema). It is a semantic vocabulary of tags (or packets of microdata). You can add these tags to your existing HTML code to help search engines understand and present your pages.

Schema.org collaborated with the major search engines to help better understand the data on a webpage.

Schema Markup is a topic for a long discussion. As of now, there is no strong evidence that this markup improves rankings. It can add some more information to your page. A search engine can identify what the content is all about.

You may have seen star ratings on search results sometimes. This is an example of Schema Markup.

If you are using WordPress, then there are many plugins that can enable this feature in your post. According to your niche and the type of content, you can add them accordingly.

One of the best plugins is Schema – All In One Schema Rich Snippets. This plugin supports various data types.

If you are not using WordPress then you can go with Google’s Structured Data Markup Helper. This tool will help you to generate custom codes for your website. Choose the data type in the list and it will generate code for your web page.
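If you prefer to write the markup yourself, Schema.org vocabulary can also be embedded as a JSON-LD script block, which is a different encoding from the microdata mentioned above. Here is a minimal sketch for a product with a star rating. The `product_jsonld` helper and the example values are made up, while the `Product`, `AggregateRating`, `ratingValue`, and `reviewCount` names come from Schema.org.

```python
import json

def product_jsonld(name, rating, review_count):
    """Return a JSON-LD <script> block describing a product and its rating."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"

# Hypothetical product; use your real product data here.
snippet = product_jsonld("Example Widget", 4.5, 27)
print(snippet)
```

Paste the printed block into the page's HTML and then validate it with a structured data testing tool before relying on it.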

9. Robots.txt

Robots.txt is a file that contains instructions for search engine spiders. It tells them not to crawl some pages or sections of a website. Major search engines like Google and Bing recognize robots.txt instructions and crawl the site accordingly.

Benefits of Having robots.txt File

1. Block Search Engines From Certain Pages: Some pages or portions of the website should not be indexed. You may not want these to appear in search results. The pages like a landing page, login page, or staging version of your website should not be on the index.

So you can add them to the robots.txt file, and search engine crawlers and bots will not crawl them.

2. Maximize Crawl Budget: We have discussed the crawl budget earlier in this article. A robots.txt file helps you to maximize your crawl budget. It limits the number of pages to be crawled. So search engine crawlers will have more time to spend on the pages that matter to you.

The file has a specific format.

User-agent: X
Disallow: Y

“User-agent” names the specific bot that you’re talking to, like “Googlebot”.

“Disallow” lists the pages or sections of your website that you want to block.

For example,

User-agent: Googlebot
Disallow: /tag/

This rule would tell Googlebot not to crawl the tag folder of your website.

You can also replace Googlebot with an asterisk (*) sign. This refers to all search engine bots.

User-agent: *
Disallow: /tag/

There is a whole list of rules available to customize this text file. You can find a detailed guide on the robots.txt file from Google.

The recommended way to place the file is in the root directory.

The URL structure should be: https://yourdomain.com/robots.txt

Note: robots.txt file name is case sensitive. So always use the same name in lowercase.

I have seen some bad cases where people accidentally blocked useful pages. I have also heard of people blocking search crawlers from their entire site by mistake.

Luckily, Google has a handy tool to test your robots.txt file. Put your code in the Robots Testing Tool and it will show you any errors and warnings.

If you find any errors, then correct them and upload the fixed file to your site.
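You can also sanity-check your rules locally before uploading the file. The sketch below uses `urllib.robotparser` from Python's standard library. The rules and URLs are examples only, and Python's parser may not match every detail of how Googlebot interprets a file, so treat it as a first check and not as the final word.

```python
from urllib.robotparser import RobotFileParser

def is_allowed(rules, user_agent, url):
    """Return True if `user_agent` may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(user_agent, url)

# Example rules: block the tag folder for every bot.
RULES = """\
User-agent: *
Disallow: /tag/
"""

print(is_allowed(RULES, "Googlebot", "https://yourdomain.com/tag/seo/"))
print(is_allowed(RULES, "Googlebot", "https://yourdomain.com/blog/technical-seo/"))
```

If a page you care about comes back as blocked, fix the rule before the file goes live.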

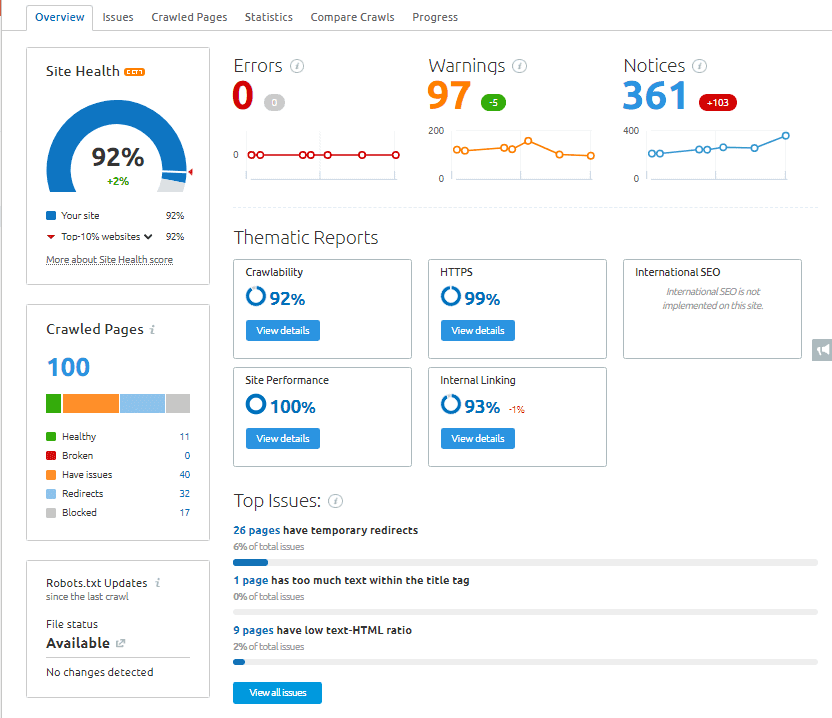

10. In-Depth Technical SEO Audit

Now it’s time to do an in-depth technical SEO Audit. You might be wondering how to perform a site audit.

Well, you can use free or paid tools for this purpose. No single tool covers every aspect, so you can combine them to get better results.

Some of the best free tools for this purpose are:

- Google Search Console

- Google PageSpeed Insights

- Screaming Frog SEO Spider

Some of the paid tools are:

- SEMrush

- Ahrefs

- Deep Crawl

These tools save a lot of time. They give you a clear view of what you have to audit. Once you fix the issues they report, your site will be in better shape to rank.

Conclusion

So these are some of the important factors you need to consider when you are performing technical SEO.

Like other SEO work, perform a technical audit at regular intervals. If you are consistent with it, then you can expect a better ranking.